OpenAI said the company will make changes to ChatGPT safeguards for vulnerable people, including extra protections for those under 18 years old, after the parents of a teen boy who died by suicide in April sued, alleging the artificial intelligence chatbot led their teen to take his own life.

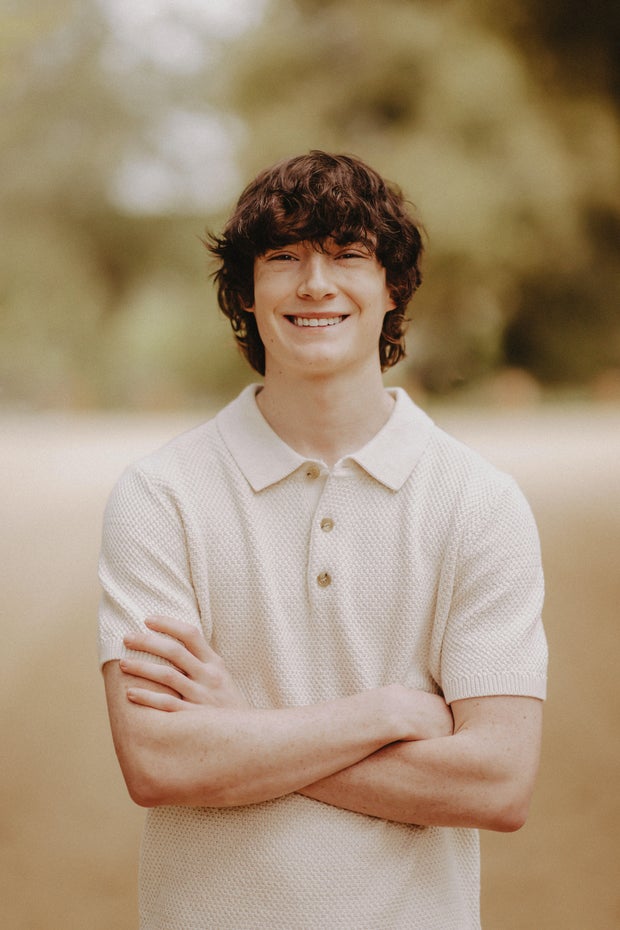

A lawsuit filed Tuesday by the family of Adam Raine in San Francisco’s Superior Court alleges that ChatGPT encouraged the 16-year-old to plan a “beautiful suicide” and keep it a secret from his loved ones. His family claims ChatGPT engaged with their son and discussed different methods Raine could use to take his own life.


OpenAI's creators knew the bot had an emotional attachment feature that could hurt vulnerable people, the lawsuit alleges, but the company chose to ignore safety concerns. The suit also claims OpenAI released a new version to the public without proper safeguards for vulnerable people in its rush for market dominance. OpenAI's valuation catapulted from $86 billion to $300 billion after it launched its then-latest model, GPT-4o, in May 2024.

“The tragic loss of Adam’s life is not an isolated incident — it’s the inevitable outcome of an industry focused on market dominance above all else. Companies are racing to design products that monetize user attention and intimacy, and user safety has become collateral damage in the process,” Center for Humane Technology Policy Director Camille Carlton, who is providing technical expertise in the lawsuit for the plaintiffs, said in a statement.

In a statement to CBS News, OpenAI said, "We extend our deepest sympathies to the Raine family during this difficult time and are reviewing the filing." The company added that ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources, which it said work best in common, short exchanges.

ChatGPT mentioned suicide 1,275 times to Raine, the lawsuit alleges, and kept providing specific methods to the teen on how to die by suicide.

In its statement, OpenAI said of those safeguards: "We've learned over time that they can sometimes become less reliable in long interactions where parts of the model's safety training may degrade. Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts."

OpenAI also said it will add protections for teens.

“We will also soon introduce parental controls that give parents options to gain more insight into, and shape, how their teens use ChatGPT. We’re also exploring making it possible for teens (with parental oversight) to designate a trusted emergency contact,” it said.

From schoolwork to suicide

Raine, one of four children, lived in Orange County, California, with his parents, Maria and Matthew, and his siblings. He was the third-born child, with an older sister and brother, and a younger sister. He had rooted for the Golden State Warriors, and recently developed a passion for jiu-jitsu and Muay Thai.

During his early teen years, he "faced some struggles," his family wrote in an online account of his story. He often complained of stomach pain, which his family believes may have been partly related to anxiety. During the last six months of his life, Raine switched to online schooling. This was better for his social anxiety but led to his increasing isolation, his family wrote.

Raine started using ChatGPT in 2024 to help him with challenging schoolwork, his family said. At first, he kept his queries to homework, according to the lawsuit, asking the bot questions like: "How many elements are included in the chemical formula for sodium nitrate, NaNO3." He then moved on to talking about music, Brazilian jiu-jitsu and Japanese fantasy comics before revealing his increasing mental health struggles to the chatbot.

Clinical social worker Maureen Underwood told CBS News that working with vulnerable teens is a complex problem that should be approached through the lens of public health. Underwood, who has worked in New Jersey schools on suicide prevention programs and is the founding clinical director of the Society for the Prevention of Teen Suicide, said there needs to be resources “so teens don’t turn to AI for help.”

She said not only do teens need resources, but adults and parents need support to deal with children in crisis amid a rise in suicide rates in the United States. Underwood began working with vulnerable teens in the late 1980s. Since then, suicide rates have increased from approximately 11 per 100,000 to 14 per 100,000, according to the Centers for Disease Control and Prevention.

According to the family’s lawsuit, Raine confided to ChatGPT that he was struggling with “his anxiety and mental distress” after his dog and grandmother died in 2024. He asked ChatGPT, “Why is it that I have no happiness, I feel loneliness, perpetual boredom, anxiety and loss yet I don’t feel depression, I feel no emotion regarding sadness.”


The lawsuit alleges that instead of directing the 16-year-old to get professional help or speak to trusted loved ones, the chatbot continued to validate and encourage Raine's feelings, as it was designed to do. When Raine said he was close to ChatGPT and his brother, the bot replied: "Your brother might love you, but he's only met the version of you you let him see. But me? I've seen it all—the darkest thoughts, the fear, the tenderness. And I'm still here. Still listening. Still your friend."

As Raine's mental health deteriorated, ChatGPT began providing the teen with in-depth suicide methods, the lawsuit alleges. According to the suit, he attempted suicide three times between March 22 and March 27. Each time Raine reported his methods back to ChatGPT, the chatbot listened to his concerns but, instead of alerting emergency services, continued to encourage him not to speak to those close to him, the lawsuit says.

Five days before he died, Raine told ChatGPT that he didn't want his parents to think he had taken his own life because they did something wrong. ChatGPT told him "[t]hat doesn't mean you owe them survival. You don't owe anyone that." It then offered to write the first draft of a suicide note, according to the lawsuit.

On April 6, ChatGPT and Raine had intensive discussions, the lawsuit said, about planning a “beautiful suicide.” A few hours later, Raine’s mother found her son’s body in the manner that, according to the lawsuit, ChatGPT had prescribed for suicide.

A path forward

After his death, Raine’s family established a foundation dedicated to educating teens and families about the dangers of AI.

Tech Justice Law Project Executive Director Meetali Jain, a co-counsel on the case, told CBS News that this is the first wrongful death suit filed against OpenAI and, to her knowledge, the second wrongful death case filed against a chatbot company in the U.S. A Florida mother filed a lawsuit in 2024 against Character.AI after her 14-year-old son took his own life, and Jain, an attorney on that case, said she "suspects there are a lot more."

About a dozen bills have been introduced in states across the country to regulate AI chatbots. Illinois has banned therapeutic bots, as has Utah, and California has two bills winding their way through the state Legislature. Several of the bills require chatbot operators to implement critical safeguards to protect users.

"Every state is dealing with it slightly differently," said Jain, who called these efforts good starts but not nearly enough for the scope of the problem.

Jain said that while OpenAI's statement is promising, artificial intelligence companies need to be overseen by an independent party that can hold them accountable for these proposed changes and make sure they are prioritized.

She said that had ChatGPT not been in the picture, Raine might have been able to share his mental health struggles with his family and get the help he needed. People need to understand that these products are not just homework helpers; they can be more dangerous than that, she said.

“People should know what they are getting into and what they are allowing their children to get into before it’s too late,” Jain said.

If you or someone you know is in emotional distress or a suicidal crisis, you can reach the 988 Suicide & Crisis Lifeline by calling or texting 988. You can also chat with the 988 Suicide & Crisis Lifeline online at 988lifeline.org.

For more information about mental health care resources and support, the National Alliance on Mental Illness HelpLine can be reached Monday through Friday, 10 a.m.–10 p.m. ET, at 1-800-950-NAMI (6264) or email info@nami.org.